Load Balancer (LB)

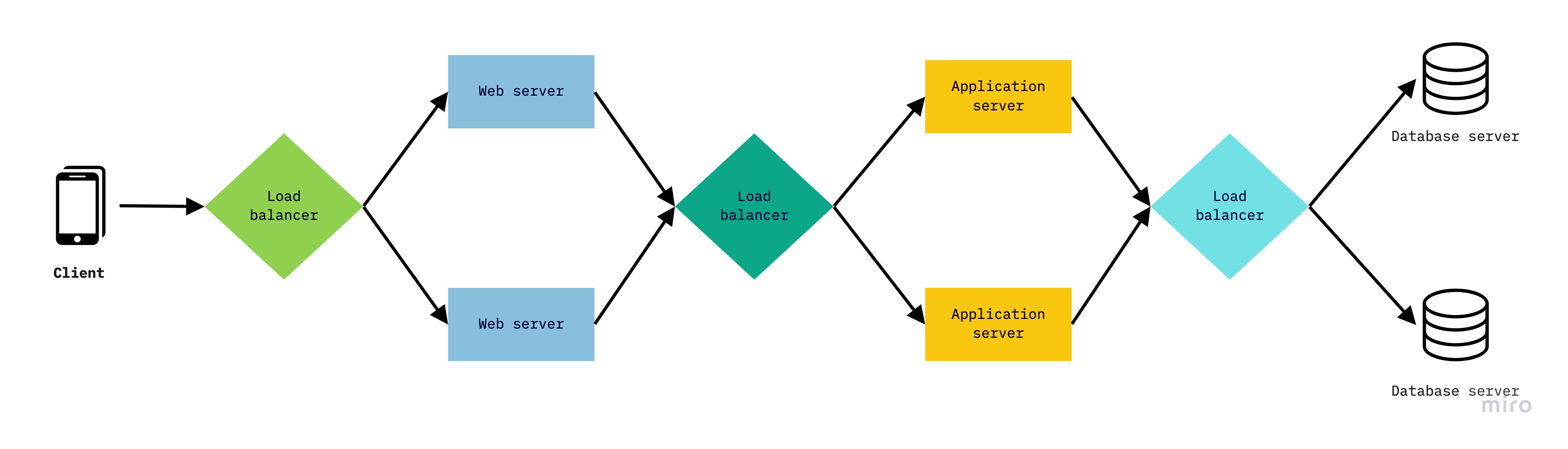

Determine where in the system you need load balancing.

| |
|---|
| Load balancer examples |

Load Balancers (LBs) are reverse proxies. A reverse proxy is a server that sits between clients and servers and acts on behalf of the servers. A proxy that acts on behalf of the client is called a forward proxy.

Jobs of LB

-

Accepts incoming traffic via one or more listeners.

A listeners is a process that checks for connection request configured with a protocol and port number for connections from clients to the LB (frontends) and from the LB to its registered targets like instances, IP addresses, containers (backends).

-

Monitors the health of its registered targets and routes traffic only to healthy targets.

When it detects an unhealthy target, it stops routing traffic to that target. It also handles connection draining, so that in-flight requests have time to terminate when the target is deregistering or unhealthy. This is useful for seamlessly doing maintenance tasks like software upgrades with minimal disruption

-

Monitors metrics such as throughput, CPU and memory utilisation of its targets.

-

Dynamically scales itself and its backends, based on auto-scaling rules.

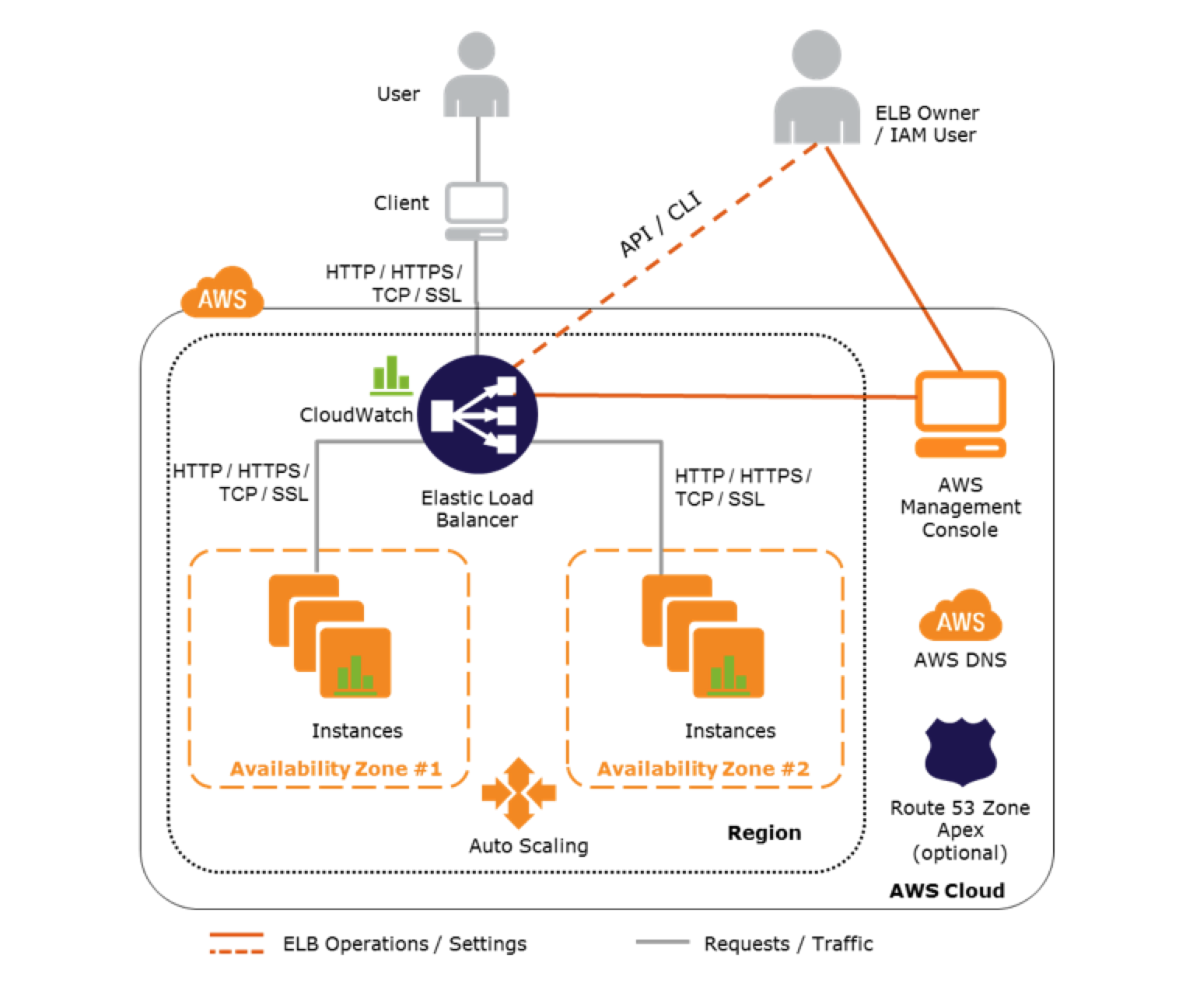

An Elastic Load Balancer (ELB) is designed to be highly-available across multiple AZs and abstracts away scaling itself.

-

Can handle connection multiplexing.

Requests from multiple clients on multiple front-end connections can be routed to a given target through a single backend connection.

-

Can offload user authentication, including federated providers.

-

Can offloads encryption and decryption (SSL termination) from its registered targets.
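The health-aware routing and connection draining described in the list above can be sketched as follows. This is a minimal illustration under stated assumptions, not how any particular load balancer is implemented: the `Target` and `TargetPool` classes and all of their methods are hypothetical names introduced for this example.

```python
from dataclasses import dataclass, field


@dataclass
class Target:
    """A registered backend (hypothetical model, for illustration only)."""
    name: str
    healthy: bool = True
    draining: bool = False
    in_flight: int = 0  # requests currently being served


@dataclass
class TargetPool:
    targets: dict[str, Target] = field(default_factory=dict)

    def register(self, name: str) -> None:
        self.targets[name] = Target(name)

    def routable(self) -> list[str]:
        # New requests go only to targets that are healthy and not draining.
        return [t.name for t in self.targets.values()
                if t.healthy and not t.draining]

    def start_request(self, name: str) -> None:
        if name not in self.routable():
            raise ValueError(f"{name} is not accepting new requests")
        self.targets[name].in_flight += 1

    def finish_request(self, name: str) -> None:
        self.targets[name].in_flight -= 1
        self._remove_if_drained(name)

    def deregister(self, name: str) -> None:
        # Draining: stop sending new requests, let in-flight ones complete.
        self.targets[name].draining = True
        self._remove_if_drained(name)

    def _remove_if_drained(self, name: str) -> None:
        target = self.targets[name]
        if target.draining and target.in_flight == 0:
            del self.targets[name]


pool = TargetPool()
pool.register("a")
pool.register("b")
pool.start_request("a")
pool.deregister("a")                     # "a" stops receiving new requests
still_registered = "a" in pool.targets   # but stays until its request ends
pool.finish_request("a")
removed = "a" not in pool.targets
```

A production load balancer would typically also cap the draining period with a timeout; that is omitted here to keep the sketch short.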

Benefits of LB

- Makes the system more fault-tolerant

- Actively monitors the system

- Is a line of defence against network attacks

- Improves latency and reduces the load on its targets

How LB routes requests

- The client resolves the LB’s domain name using a DNS server. The DNS server returns one or more IP addresses of the LB nodes.

  These IP addresses can be remapped quickly in response to changing traffic. The DNS entry also specifies a time to live (TTL), e.g. 60 seconds, which controls how long clients may cache the addresses. For lengthy operations, such as file uploads, the idle timeout for connections should be increased so that they have time to complete.

- The client determines which IP address to use to send requests to the load balancer.

- The LB node receives the request, selects a healthy registered target and sends the request to the target using its private IP address.
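The three routing steps above can be simulated in a few lines. This is a sketch with made-up data: the domain, the IP addresses (taken from documentation-only ranges), the `DNS_RECORDS` and `TARGETS` tables and all function names are assumptions for illustration, and random choice stands in for whatever selection logic a real client or LB node applies.

```python
import random

# Hypothetical DNS record for the LB and the targets registered behind it.
DNS_RECORDS = {
    "lb.example.com": {
        "ttl_seconds": 60,
        "addresses": ["203.0.113.10", "203.0.113.11"],
    },
}
TARGETS = {
    "10.0.1.5": True,   # private IP -> currently healthy?
    "10.0.2.7": False,
    "10.0.3.9": True,
}


def resolve(domain: str) -> list[str]:
    """Step 1: DNS returns one or more IP addresses of LB nodes."""
    return list(DNS_RECORDS[domain]["addresses"])


def choose_node(addresses: list[str], rng: random.Random) -> str:
    """Step 2: the client decides which of the returned addresses to use."""
    return rng.choice(addresses)


def select_target(rng: random.Random) -> str:
    """Step 3: the LB node picks a healthy target by its private IP."""
    healthy = [ip for ip, ok in TARGETS.items() if ok]
    if not healthy:
        raise RuntimeError("no healthy targets registered")
    return rng.choice(healthy)


def handle_request(domain: str, rng: random.Random) -> tuple[str, str]:
    """Run all three steps and return (LB node IP, target private IP)."""
    node_ip = choose_node(resolve(domain), rng)
    target_ip = select_target(rng)
    return node_ip, target_ip


node_ip, target_ip = handle_request("lb.example.com", random.Random(0))
```

Whatever the seed, `target_ip` is never `10.0.2.7`, because that target is marked unhealthy.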

How LB selects a target

- Least Connection Method or Least Outstanding Requests (LOR)

  Directs traffic to the server with the fewest active connections (or pending, unfinished requests). Useful when there are a large number of persistent client connections which are unevenly distributed between the servers. AWS supports LOR as a routing algorithm for ALB.

- Least Response Time Method

  Directs traffic to the server with the fewest active connections and the lowest average response time.

- Least Bandwidth Method

  Selects the server that is currently serving the least amount of traffic, measured in megabits per second (Mbps).

- Round Robin Method

  Cycles through a list of servers and sends each new request to the next server. When it reaches the end of the list, it starts over at the beginning. It is most useful when the servers are of equal specification and there are not many persistent connections. Round Robin is one of the most commonly used algorithms.

- Weighted Round Robin Method

  Handles servers with different processing capacities. Each server is assigned a weight (an integer value that indicates its processing capacity). Servers with higher weights receive new connections before those with lower weights, and receive more connections overall.

- IP Hash Method

  A hash of the client's IP address determines which server receives the request, so a given client is consistently routed to the same server while the server list is unchanged.
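Several of the selection methods above are small enough to sketch directly. The functions below are simplified illustrations, not the implementation used by any specific load balancer; the function names and server names are assumptions, and the weighted variant uses a naive expansion of the server list rather than a smooth interleaving.

```python
import hashlib
import itertools


def round_robin(servers):
    """Cycle through the servers, starting over at the end of the list."""
    return itertools.cycle(servers)


def weighted_round_robin(weights):
    """Yield each server `weight` times per cycle, highest weight first."""
    ordered = sorted(weights.items(), key=lambda item: -item[1])
    expanded = [name for name, weight in ordered for _ in range(weight)]
    return itertools.cycle(expanded)


def least_connections(active_connections):
    """Pick the server with the fewest active connections."""
    return min(active_connections, key=active_connections.get)


def ip_hash(client_ip, servers):
    """Map a client IP to a server using a stable hash of the address."""
    digest = hashlib.sha256(client_ip.encode()).digest()
    return servers[int.from_bytes(digest[:8], "big") % len(servers)]


servers = ["s1", "s2", "s3"]

rr = round_robin(servers)
first_four = [next(rr) for _ in range(4)]      # ['s1', 's2', 's3', 's1']

wrr = weighted_round_robin({"small": 1, "big": 2})
one_cycle = [next(wrr) for _ in range(3)]      # ['big', 'big', 'small']

least = least_connections({"s1": 12, "s2": 3, "s3": 7})   # 's2'

# The same client IP always maps to the same server for a fixed server list.
sticky = ip_hash("198.51.100.7", servers) == ip_hash("198.51.100.7", servers)
```

Note that the modulo-based IP hash remaps many clients when a server is added or removed; schemes such as consistent hashing reduce that churn, but are outside the scope of this sketch.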

LB alternative

An alternative to classic load balancers is a service mesh architecture, explained in Define your API. A service mesh improves service-to-service communication.

A service mesh is a separate entity that manages each inbound and outbound request to control security (encryption), identity, observability (logging, tracing), error handling and load balancing outside application code.

It is implemented as a proxy server which runs alongside each replica of a service, on the same VM, host, pod etc.

A service mesh architecture is suitable for organisations where all teams are aligned on their architecture, have control over all the services (i.e. no external third-party services) and are able to share the same Certificate Authority (CA) between services (although multiple CAs can be used).

Load balancing can be applied at several points in the system:

- DNS load balancing, see DNS routing options

- An external LB for web servers

- An internal LB for application servers

- An internal LB for databases

- A self-balancing service mesh architecture

Determine the OSI layer the LB operates at.

| |
|---|
| Open Systems Interconnection Model |

- Network Load Balancer (NLB) at Layer 4

  - Provides ultra-low latency and high throughput, handling millions of requests per second

  - Handles sudden, volatile traffic patterns

  - Supports static Elastic IP addresses, useful for IP whitelisting

  - Supports UDP for IoT, gaming, streaming and media transfers

- Application Load Balancer (ALB) at Layer 7

  - Has visibility over the HTTP request to create custom routing based on request headers, query params, source IP CIDR etc.

  - Serves multiple domains with SNI and custom routing based on path

  - Supports session affinity (sticky sessions) to route requests from the same client to the same target

  - Can send a fixed response from the ALB, without a backend

  - Supports offloading user authentication to the ALB

  - Can perform redirects directly from the ALB, e.g. HTTP→HTTPS

  - Supports slow start to give new targets some time to warm up before receiving their full share of requests

  - Supports Lambda functions as targets
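Because a Layer 7 load balancer can see the HTTP request, its routing can be modelled as an ordered list of rules. The sketch below illustrates that idea only; it is not the ALB API, and the `Request`, `Action` and `Rule` types, the rule conditions and the target group names are all hypothetical.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Request:
    scheme: str
    host: str
    path: str
    headers: dict


@dataclass
class Action:
    kind: str        # "forward", "redirect" or "fixed-response"
    value: str       # target group name, redirect URL or response body
    status: int = 200


@dataclass
class Rule:
    matches: Callable[[Request], bool]
    action: Callable[[Request], Action]


RULES = [
    # Redirect plain HTTP to HTTPS directly from the load balancer.
    Rule(lambda r: r.scheme == "http",
         lambda r: Action("redirect", f"https://{r.host}{r.path}", 301)),
    # Fixed response served without any backend.
    Rule(lambda r: r.path == "/maintenance",
         lambda r: Action("fixed-response", "Back soon", 503)),
    # Path-based routing.
    Rule(lambda r: r.path.startswith("/api/"),
         lambda r: Action("forward", "api-targets")),
    # Header-based routing.
    Rule(lambda r: r.headers.get("X-Beta") == "1",
         lambda r: Action("forward", "beta-targets")),
]
DEFAULT = Action("forward", "web-targets")


def route(request: Request) -> Action:
    """Return the action of the first matching rule, else the default."""
    for rule in RULES:
        if rule.matches(request):
            return rule.action(request)
    return DEFAULT


redirected = route(Request("http", "example.com", "/api/users", {}))
forwarded = route(Request("https", "example.com", "/api/users", {}))
```

Rules are evaluated in order, so the HTTP→HTTPS redirect wins over the path rule for the first request, while the second request is forwarded to the hypothetical `api-targets` group.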

Set up health checks for targets.

The LB periodically pings its targets to check their health and avoid sending traffic to unhealthy instances. A health check can be configured with:

- a protocol, e.g. HTTP or HTTPS

- a port

- a path, e.g. /healthy

- an interval between checks

- a threshold for the number of consecutive successful checks before a target is considered healthy

- a timeout after which a check is considered failed

- a success status code
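The settings above can be combined into a small state tracker that decides when a target counts as healthy. This is a sketch under assumptions: the class names and all default values are illustrative, and the `unhealthy_threshold` setting is an addition that does not appear in the list above.

```python
from dataclasses import dataclass


@dataclass
class HealthCheckConfig:
    # All defaults are illustrative values, not recommendations.
    protocol: str = "HTTP"
    port: int = 80
    path: str = "/healthy"
    interval_seconds: int = 30
    timeout_seconds: int = 5
    healthy_threshold: int = 3     # consecutive successes to become healthy
    unhealthy_threshold: int = 2   # assumed: consecutive failures to become unhealthy
    success_status: int = 200


class HealthTracker:
    def __init__(self, config):
        self.config = config
        self.healthy = False       # targets start out as not yet healthy
        self._successes = 0
        self._failures = 0

    def record(self, status_code, elapsed_seconds):
        """Record one check result and return the current health state."""
        ok = (status_code == self.config.success_status
              and elapsed_seconds <= self.config.timeout_seconds)
        if ok:
            self._successes += 1
            self._failures = 0
            if self._successes >= self.config.healthy_threshold:
                self.healthy = True
        else:
            self._failures += 1
            self._successes = 0
            if self._failures >= self.config.unhealthy_threshold:
                self.healthy = False
        return self.healthy


tracker = HealthTracker(HealthCheckConfig())
warm_up = [tracker.record(200, 0.1) for _ in range(3)]   # [False, False, True]
after_one_failure = tracker.record(500, 0.1)             # True
after_timeout = tracker.record(200, 9.0)                 # False
```

Requiring several consecutive results before changing state keeps a single slow or failed check from removing a target that is otherwise fine.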

Set up auto-scaling for target groups.

| |
|---|
| LB auto scaling targets |

Multiple targets (instances, IP addresses, containers etc.) can be grouped together into a Target Group for easier configuration. Each target group can have auto-scaling policies for scaling in and out, which specify how to add and remove instances when demand changes. An LB can route requests to multiple Target Groups.

Auto-scaling provides redundancy and high availability, e.g. across AZs. It also performs status checks on instances, which can be combined with the health checks from the LB.

- Set up steady scaling with a minimum and desired count of targets

- Set up scheduled scaling for predictable load changes, e.g. daily or seasonal peaks

- Set up dynamic scaling in response to metrics, e.g. CPU utilisation above a threshold
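The three scaling modes above can be expressed as small functions that each return a target count. This is a sketch only: the `ScalingConfig` fields, the thresholds, the peak window and the one-instance step size are all assumptions chosen for illustration, and `max_count` is an assumed upper bound that the list above does not mention.

```python
from dataclasses import dataclass
from datetime import time


@dataclass
class ScalingConfig:
    min_count: int
    max_count: int               # assumed upper bound
    desired_count: int
    scale_out_cpu: float = 70.0  # add a target above this average CPU %
    scale_in_cpu: float = 30.0   # remove a target below this average CPU %


def clamp(count, cfg):
    """Keep the count within the configured minimum and maximum."""
    return max(cfg.min_count, min(cfg.max_count, count))


def steady_count(cfg):
    """Steady scaling: hold the desired count."""
    return clamp(cfg.desired_count, cfg)


def scheduled_count(now, cfg, peak_start=time(9), peak_end=time(18), peak_count=6):
    """Scheduled scaling: use a larger fleet during a known daily peak."""
    in_peak = peak_start <= now < peak_end
    return clamp(peak_count if in_peak else cfg.desired_count, cfg)


def dynamic_count(current_count, avg_cpu_percent, cfg):
    """Dynamic scaling: step out or in when CPU crosses a threshold."""
    if avg_cpu_percent > cfg.scale_out_cpu:
        wanted = current_count + 1
    elif avg_cpu_percent < cfg.scale_in_cpu:
        wanted = current_count - 1
    else:
        wanted = current_count
    return clamp(wanted, cfg)


cfg = ScalingConfig(min_count=2, max_count=10, desired_count=3)
at_noon = scheduled_count(time(12), cfg)      # 6
at_night = scheduled_count(time(22), cfg)     # 3
busy = dynamic_count(4, 85.0, cfg)            # 5
idle_at_minimum = dynamic_count(2, 10.0, cfg) # 2, never below min_count
```

Clamping every result keeps scale-in from dropping below the minimum needed for redundancy, and keeps scale-out from growing without bound.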

Check if logging and monitoring are enabled.

- Enable metrics

- Enable access logs

- Enable request tracing